Most modern programming languages simplify multi-threading and parallelism by offering asynchronous computation functionality. This functionality lets a programmer run logic concurrently, typically on one or more other threads, and retrieve its results with little effort. Examples of asynchronous computation are JavaScript’s async functions and Go’s goroutines.

When implementing a program, long-running computations or complex I/O operations can be spun off onto other threads, allowing the program’s main logic to continue running in the meantime.

Design

To show the inner workings of some of these asynchronous computation methods, I have implemented the following:

- runAsync: Schedules a piece of code to run asynchronously, that is, without blocking the thread that’s calling it.

- Promise: A class that represents a value that will be available in the future; it ‘promises’ that the result of an asynchronous function will eventually be available.

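- Thread pool: Creating one thread per asynchronously run function can cause significant overhead, since creating and destroying threads is relatively expensive. A thread pool has been implemented to reuse a limited set of worker threads, capping the number of threads present at any given time.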

Implementation

As listed above, several classes and functions are needed to run code asynchronously and to make its results easily accessible through Promise objects.

runAsync

The main building block is the runAsync function, which takes the logic to be executed asynchronously (another function) and returns a Promise object that ‘promises’ to eventually make the result of this logic available.

The signature for this function is as follows:

```cpp
#include <functional>
#include <memory>

template <typename T>
std::shared_ptr<Promise<T>> runAsync(const std::function<T()>& func) {
    // …
}
```

During the execution of this function, the following steps are taken:

- Create a new Promise object, to be returned to the caller.

- Wrap the asynchronous logic in another function so that it can run on the thread pool. This wrapped function does the following:

    - Run the given logic.

    - Place the result of this logic in the Promise object.

- Place this wrapped function in a queue for threads within the thread pool to read from and execute.

- Return the Promise object to the caller.

Meanwhile, the threads within the thread pool take these wrapped functions from the queue and execute them. Because multiple threads read from and write to this queue, access to it is protected by locks to prevent race conditions.
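The runAsync steps and the worker threads that drain the queue can be sketched together as follows. This is an illustration under several assumptions, not the code from the repository: the names ThreadPool and enqueue are hypothetical, the pool is passed to runAsync explicitly (the article’s signature takes only the function), the Promise is returned through a std::shared_ptr so that both the caller and the worker can hold it, a condition variable lets idle workers sleep until work arrives, and only non-void result types are handled.

```cpp
#include <condition_variable>
#include <cstddef>
#include <functional>
#include <memory>
#include <mutex>
#include <optional>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Compact promise used by this sketch: getValue blocks until setValue has run.
template <typename T>
class Promise {
public:
    T getValue() {
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return value_.has_value(); });
        return *value_;
    }
    void setValue(T value) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            value_ = std::move(value);
        }
        ready_.notify_all();
    }
private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::optional<T> value_;
};

// Hypothetical fixed-size pool: workers pop wrapped functions from a lock-protected queue.
class ThreadPool {
public:
    explicit ThreadPool(std::size_t numThreads) {
        for (std::size_t i = 0; i < numThreads; ++i) {
            workers_.emplace_back([this] { workerLoop(); });
        }
    }

    ~ThreadPool() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            stopping_ = true;
        }
        available_.notify_all();
        for (std::thread& worker : workers_) worker.join();
    }

    // Pushes a wrapped function under the lock and wakes one idle worker.
    void enqueue(std::function<void()> task) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            tasks_.push(std::move(task));
        }
        available_.notify_one();
    }

private:
    void workerLoop() {
        for (;;) {
            std::function<void()> task;
            {
                std::unique_lock<std::mutex> lock(mutex_);
                available_.wait(lock, [this] { return stopping_ || !tasks_.empty(); });
                if (stopping_ && tasks_.empty()) return;  // queue drained, shut down
                task = std::move(tasks_.front());
                tasks_.pop();
            }
            task();  // runs outside the lock; exceptions are not handled in this sketch
        }
    }

    std::vector<std::thread> workers_;
    std::queue<std::function<void()>> tasks_;
    std::mutex mutex_;
    std::condition_variable available_;
    bool stopping_ = false;
};

// Create a Promise, wrap func so it stores its result, enqueue the wrapper, return the Promise.
template <typename T>
std::shared_ptr<Promise<T>> runAsync(ThreadPool& pool, const std::function<T()>& func) {
    auto promise = std::make_shared<Promise<T>>();
    pool.enqueue([promise, func] { promise->setValue(func()); });
    return promise;
}
```

Because T cannot be deduced from a lambda, the result type is given explicitly, for example `auto promise = runAsync<int>(pool, [] { return 6 * 7; });`, after which `promise->getValue()` returns 42. Running each task outside the lock keeps the critical section short, so workers only contend with each other while taking an item off the queue.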

Promise

After calling runAsync, the caller holds a Promise object that serves as a handle to the result of the user’s function. The Promise class provides two methods:

- getValue: Blocks the calling thread until the result is available, then returns the result.

- setValue: Sets the result.

Because these methods are called from multiple threads, they use locks internally to prevent race conditions.
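A minimal sketch of such a Promise class follows, assuming C++17. The article only states that locks are used; the condition variable and the std::optional storage are assumptions of this sketch. getValue waits on the condition variable until a value has been stored, and setValue stores the value under the lock and then wakes all waiting threads.

```cpp
#include <condition_variable>
#include <mutex>
#include <optional>
#include <utility>

// Sketch of a Promise<T>; the internals are assumptions, not the article's code.
template <typename T>
class Promise {
public:
    // Blocks the calling thread until a value has been set, then returns a copy of it.
    T getValue() {
        std::unique_lock<std::mutex> lock(mutex_);
        // The predicate guards against spurious wake-ups and covers the case
        // where setValue already ran before getValue was called.
        ready_.wait(lock, [this] { return value_.has_value(); });
        return *value_;
    }

    // Stores the result under the lock, then wakes every thread blocked in getValue.
    void setValue(T value) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            value_ = std::move(value);
        }
        ready_.notify_all();
    }

private:
    std::mutex mutex_;               // protects value_
    std::condition_variable ready_;  // signalled once value_ is set
    std::optional<T> value_;         // empty until setValue is called
};
```

In this design a worker thread calls setValue once the user’s function returns, while any number of threads may call getValue; because the value is copied out, it can be read more than once.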

Analysis

From a programmer’s perspective, running asynchronous logic looks like this:

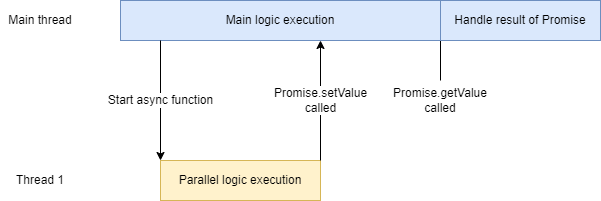

Note that this is the ideal case, in which the main thread never has to wait for the asynchronous function to finish.

When the main thread does have to wait for the asynchronous function to finish, execution is less efficient, because the main thread blocks in getValue until the result becomes available.

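Even though the main thread is blocked on the result of the asynchronous computation, this is still more efficient than running all logic on one thread, because the work the main thread performed before calling getValue overlapped with the asynchronous computation. This holds as long as that overlap outweighs the overhead of scheduling the function on the thread pool.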
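The efficiency argument can be made concrete with a simple timing model. The symbols here are illustrative assumptions, not measurements from the article: the main thread does $t_m$ of its own work before calling getValue, the asynchronous function takes $t_a$, a worker thread and a CPU core are free to run it immediately, and $t_o$ is the overhead of creating the Promise, enqueueing the wrapped function and waking a worker.

$$
T_{\text{sequential}} = t_m + t_a, \qquad T_{\text{async}} \approx \max(t_m, t_a) + t_o
$$

Since $t_m + t_a - \max(t_m, t_a) = \min(t_m, t_a)$, the asynchronous version is faster whenever $t_o < \min(t_m, t_a)$. For example, with $t_m = 30$ ms, $t_a = 50$ ms and negligible overhead, the sequential run takes 80 ms, while the asynchronous run takes about 50 ms, of which the main thread spends roughly 20 ms blocked in getValue.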

The source code for the asynchronous computation functions can be found at: https://github.com/daankolthof/async-computation.